Profiling in Production: What’s Slowing You Down?


Sara Olson | Marketing Analyst

February 8, 2016

“The site is slow.”

We’ve all been there. The architecture is sound, the production infrastructure rivals the raw computing power of IBM Watson, and of course the site is blazing fast in local development environments. Despite our best efforts at building scalable systems and optimizing for speed, slow code paths can put a damper on an otherwise successful production launch.

There are plenty of proactive approaches to preventing this scenario well before going live:

- Using representative data (a realistic number of records, with realistic size and complexity)

- Setting a performance budget and sticking to it, both in architectural decision-making and as part of the QA process

- Load testing and performance auditing (prior to launch!)

- Preemptive caching, both in the application and at the edge

- Performance analysis as part of a continuous integration suite

Even with adequate attention to site speed during the development and QA cycle, slow code paths can still reach production, and user experience is king. If your production site is slow, you’re probably going to hear about it from your users, and you’re going to want some weapons in your arsenal along with a solid strategy to fight the good fight.

Where to Start?

As with any problem, the best place to start is an accurate description of the performance deficit, which leads to a reliable, reproducible test case so that the root cause can be diagnosed and resolved. That involves:

Collecting information: Gather user feedback, get product owner input, quantify “how slow is slow”.

Getting the right level of detail: Which pages are slow? Which users experience the problem? What actions are being performed? Is it consistent or sporadic?

Reproducing the problem: Can you replicate the problem on production with the given parameters? Is it slow in a local environment as well? If not, which environmental or contextual differences might be a factor? Which indicators point to a problem in the application, and which point to infrastructure?

Analyzing and resolving: Collect profiling data on production and compare it with data from other environments. Analyze the profiling data to pinpoint slow code paths. Work to mitigate the issue locally, then rerun the analysis on production after deploying to confirm the fix.

Instrumenting vs. Sampling

Code profiling is essential for diagnosing performance problems, but the best approach to profiling may differ depending on your specific scenario and the environment you are analyzing.

During development, instrumented profiling is highly effective because of the accuracy and level of fidelity it provides. Instrumentation in interpreted languages like PHP and JavaScript involves hooking into the runtime and explicitly collecting information about every function call as it occurs. This lets you view the elapsed time spent, a call graph of function calls in the stack, memory and CPU stats, and other system data about your code as it executes on each request. Instrumented profiling does add overhead, however, and depending on the complexity of your application it can be significant.
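To make “hooking into the runtime” concrete, here is a minimal Python analog. It is not XHProf, which does this inside the PHP engine. Python’s `sys.setprofile` installs a hook that fires on every Python-level call and return, which is enough to count calls and accumulate inclusive wall time per function. Keying results by bare function name and double-counting recursive calls are simplifications of this sketch, and `page`/`leaf` are hypothetical workloads.

```python
import sys
import time
from collections import defaultdict


def profile_calls(func, *args, **kwargs):
    """Run func with an instrumenting hook that sees every Python call/return."""
    call_counts = defaultdict(int)
    inclusive_time = defaultdict(float)  # seconds, including time in callees
    open_calls = []  # stack of (function name, start time)

    def hook(frame, event, arg):
        # Only Python-level events are handled; "c_call"/"c_return" are ignored.
        if event == "call":
            name = frame.f_code.co_name
            call_counts[name] += 1
            open_calls.append((name, time.perf_counter()))
        elif event == "return" and open_calls:
            name, started = open_calls.pop()
            inclusive_time[name] += time.perf_counter() - started

    sys.setprofile(hook)  # from here on, every call pays for the hook
    try:
        result = func(*args, **kwargs)
    finally:
        sys.setprofile(None)
    return result, dict(call_counts), dict(inclusive_time)


def leaf():
    return 1


def page():
    total = 0
    for _ in range(3):
        total += leaf()
    return total


result, counts, times = profile_calls(page)
print(result, counts)
```

Because the hook runs on every single call, the overhead grows with the number of calls, which is exactly why this style of profiling is better suited to development than to production.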

This makes instrumented profiling ideal for development and debugging sessions, but of limited use for ongoing production analysis and monitoring. “The site is slow”: let’s not make it slower!

Sample-based profiling involves taking snapshots of an application at fixed intervals. Each snapshot records the currently executing function(s), and the aggregate of these snapshots is used to approximate when and where time is being spent in your code. This provides much less detail than instrumented profiling, but without the significant added overhead, making it ideal for production use.
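The sampling approach can be sketched in Python with `sys._current_frames()` (a CPython-specific function): a background thread snapshots the main thread’s call stack at a fixed interval and counts how often each stack is seen. The 1 ms interval and the `slow_page` workload are arbitrary choices for illustration; XHProf’s own sampler runs inside the PHP engine with a fixed 0.1 s interval. In CPython the effective interval can be longer than requested, since the sampler must wait its turn for the interpreter lock.

```python
import sys
import threading
import time
from collections import Counter


def _sample_loop(thread_id, interval, stop, samples):
    """Every `interval` seconds, record the target thread's current call stack."""
    while not stop.is_set():
        frame = sys._current_frames().get(thread_id)
        stack = []
        while frame is not None:  # walk from the innermost frame outwards
            stack.append(frame.f_code.co_name)
            frame = frame.f_back
        if stack:
            # Root-first, ";"-joined: the same shape flame graph tools consume.
            samples[";".join(reversed(stack))] += 1
        time.sleep(interval)


def profile_by_sampling(func, interval=0.001):
    """Run func on this thread while a background thread samples its stack."""
    samples = Counter()
    stop = threading.Event()
    sampler = threading.Thread(
        target=_sample_loop,
        args=(threading.get_ident(), interval, stop, samples),
        daemon=True,
    )
    sampler.start()
    try:
        result = func()
    finally:
        stop.set()
        sampler.join()
    return result, samples


def slow_page():
    # Busy-wait for about 200 ms to simulate an expensive code path.
    deadline = time.perf_counter() + 0.2
    while time.perf_counter() < deadline:
        pass
    return "rendered"


result, samples = profile_by_sampling(slow_page)
for stack, count in samples.most_common(3):
    print(count, stack)
```

Unlike the instrumented version, `slow_page` itself runs unmodified; the cost is paid by a separate thread, and the result is a statistical picture rather than an exact call log.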

Samples can be collected over a period of time across many requests and analyzed in aggregate, which is especially useful for performance issues that appear random or inconsistent. Although sampling gives only a high-level statistical overview, it can very often point you quickly to the source of your performance woes.

Tooling

In almost all cases, you’re going to have a bad time if you try to identify and fix performance issues without the right tools. Assumption Driven Debugging (ADD) is not an effective technique for pinpointing where time is being spent in your application. This is especially true with a framework like Drupal, which does a lot “behind the scenes” to make our lives easier: there are many layers to pull back before you can begin diagnosing the problem.

There are several excellent commercial options, like New Relic, that have a free tier to get you started, but you can also install a PHP extension to tap into metrics on your own. Xdebug and XHProf both add profiling capabilities to PHP, and XHProf provides functions you can call directly in your code to start and stop profiling during a request. XHProf, combined with the XHProf module for Drupal, is extremely effective for instrumented profiling during development, helping ensure your code is efficient and your site is optimized for speed. The Drupal module handles enabling the profiler and displaying the output, making it really easy to drill down into the details of your page requests. XHProf also supports profiling in sampling mode, though this mode is not integrated into the XHProf Drupal module.
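In PHP, XHProf’s start/stop pair is `xhprof_enable()` and `xhprof_disable()`. As a language-neutral illustration of the same “profile just this request” pattern, here is Python’s built-in `cProfile` switched on and off around a single simulated request. `handle_request` and `profile_request` are hypothetical names, and this is an analogy, not how the Drupal module works.

```python
import cProfile
import io
import pstats


def handle_request(n):
    """Hypothetical stand-in for the work done while serving one page."""
    return sum(i * i for i in range(n))


def profile_request(n):
    profiler = cProfile.Profile()
    profiler.enable()  # start instrumented profiling (cf. xhprof_enable() in PHP)
    try:
        result = handle_request(n)
    finally:
        profiler.disable()  # stop profiling once the "request" is done
    report = io.StringIO()
    stats = pstats.Stats(profiler, stream=report)
    stats.sort_stats("cumulative").print_stats(5)  # top 5 by inclusive time
    return result, stats, report.getvalue()


result, stats, report = profile_request(10_000)
print(report)
```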

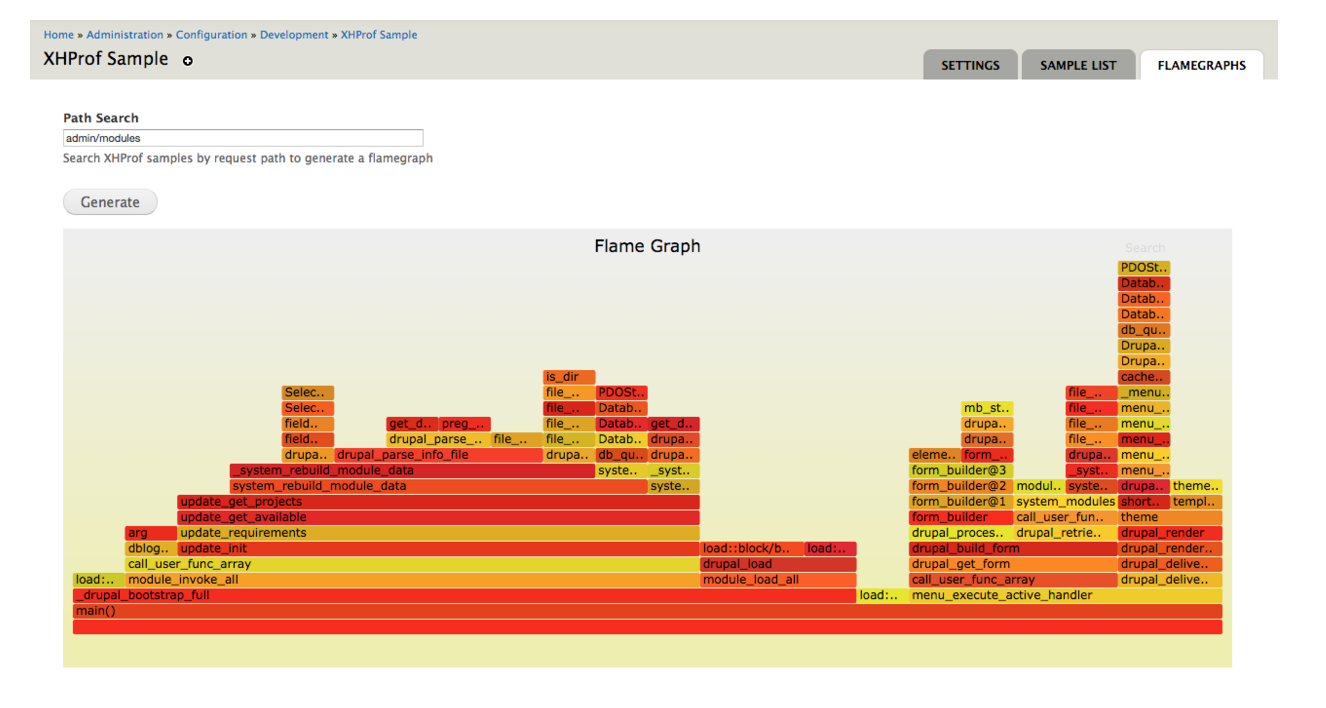

At Phase2, we often rely on XHProf sampling to gather data about the applications we build; Brad Blake talked about this and other profiling tools and techniques last year at All Things Open. Once the data has been collected, it can be fed into tools like Brendan Gregg’s Flame Graph library to paint a very clear picture of where time is being spent. This works really well, but historically it required some code changes to enable sample profiling, capture the output, and run a script to generate the flame graph. On production it can also require direct access to the server, a luxury not always available, especially for enterprise and government clients.

To make sample-based profiling and flame graph generation easier, automated, and more accessible to developers, Phase2 developed a set of modules to do the dirty work for you.

XHProf Sample: Similar to the XHProf module, but uses sampling mode to collect data for each request. Settings let you limit sampling to specific paths, or trigger it “on demand” through an explicit request header. Enabling this module on a production site lets you track requests that have been identified as slow under production conditions for further analysis, directly in your Drupal site.

XHProf Flamegraph: Builds on the work of Brendan Gregg and Mark Sonnabaum, converting the captured output of XHProf Sample into a pretty flame graph for analysis directly in the Drupal Admin UI.
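The two scoping options described for XHProf Sample (specific paths, or on demand via a request header) boil down to a per-request decision. The sketch below is a hypothetical Python version of that decision; the header name `X-Profile-Me`, the wildcard path patterns, and the precedence between the two rules are illustrative assumptions, not the module’s actual settings or code.

```python
from fnmatch import fnmatchcase


def should_sample(path, headers, path_patterns=(), on_demand_header=None):
    """Decide whether to profile one request (hypothetical scoping rules)."""
    # HTTP header names are case-insensitive, so compare lowercased names.
    present = {name.lower() for name in headers}
    if on_demand_header is not None:
        # On-demand mode: sample only when the client sends the header.
        return on_demand_header.lower() in present
    if path_patterns:
        # Path mode: sample only paths matching a wildcard such as "node/*".
        return any(fnmatchcase(path, pattern) for pattern in path_patterns)
    # Nothing configured: sample every request.
    return True


print(should_sample("node/42", {}, path_patterns=["node/*"]))  # True
print(should_sample("admin/config", {}, path_patterns=["node/*"]))  # False
print(should_sample("user/1", {"x-profile-me": "1"}, on_demand_header="X-Profile-Me"))  # True
```

Gating on a header keeps the cost of sampling off ordinary visitors while still letting a developer reproduce a reported slow request under real production conditions.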

Flame graphs are a visualization of profiled software, allowing the most frequent code-paths to be identified quickly and accurately. - Brendan Gregg

Each colored bar on the flame graph represents a function within a particular call stack during the profiled request, and its position in the stack shows the ancestry of the call. The width of each bar shows the total time spent in that function (including time spent in the functions it calls), as measured by the number of samples taken while that function was on the stack. XHProf has a fixed sampling interval of 0.1 s, which means that every 10 samples in which a function appears correspond to roughly 1 second of elapsed time.
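The sample-to-width arithmetic can be sketched in a few lines. The code below folds XHProf-style sample data into the `frame;frame;frame count` format read by Brendan Gregg’s `flamegraph.pl`, then charges every prefix of each stack for its samples and converts counts to seconds with the 0.1 s interval. It assumes the captured data is a mapping from timestamp to a `==>`-delimited stack string, which is our understanding of how XHProf’s sampling mode reports stacks; check this against your own capture. The function names and the sample data are hypothetical, and this is not the XHProf Flamegraph module’s code. Frame names containing `;` would need escaping, which the sketch ignores.

```python
from collections import Counter

SAMPLE_INTERVAL_S = 0.1  # XHProf's fixed sampling interval (100 ms)


def fold_xhprof_samples(samples):
    """Collapse {timestamp: "main()==>a==>b"} into Counter({"main();a;b": n})."""
    return Counter(";".join(stack.split("==>")) for stack in samples.values())


def to_flamegraph_lines(folded):
    """Emit "frame;frame;frame count" lines, the folded format flamegraph.pl reads."""
    return [f"{stack} {count}" for stack, count in sorted(folded.items())]


def inclusive_seconds(folded):
    """Estimate time per call path: a path is charged for every sample under it."""
    totals = Counter()
    for stack, count in folded.items():
        frames = stack.split(";")
        for depth in range(1, len(frames) + 1):
            totals[";".join(frames[:depth])] += count
    return {path: n * SAMPLE_INTERVAL_S for path, n in totals.items()}


# Hypothetical capture: 35 samples taken 0.1 s apart during one slow request.
stacks = (
    ["main()==>render==>query"] * 20
    + ["main()==>render==>theme"] * 10
    + ["main()==>bootstrap"] * 5
)
samples = {f"{1454925600 + i / 10:.6f}": stack for i, stack in enumerate(stacks)}

folded = fold_xhprof_samples(samples)
print("\n".join(to_flamegraph_lines(folded)))
print(inclusive_seconds(folded)["main();render"])  # 30 samples, about 3 s
```

Writing those lines to a file (say `stacks.folded`) and running `./flamegraph.pl stacks.folded > flamegraph.svg` renders the graph; in it, `render` would span 30 of the 35 sample widths.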

This makes it incredibly easy to identify, quickly and precisely, where excessive time is being spent in your application through a user-friendly visual interface. Finding the problem is often more than half the battle; after that, the only thing left to do is fix it!

Performance analysis is sometimes viewed as “difficult”, or as something that takes hours of tracing and guesswork. This is certainly true in some cases. Still, it all comes down to being proactive, asking the right questions when problems are discovered, and using the right tools to identify and solve them. The XHProf Sample and XHProf Flamegraph modules work together to lower the barrier to entry for developers, making production profiling and analysis more accessible to everyone.